Three-Phase Power or the Magic of the Missing Neutral

Electricity can seem both simple and confusing at the same time. A refresher on things like the differences between volts, amps and watts never...

4 min read

Paul Bieganski

Feb 27, 2023 10:00:00 AM

Editor's note: This blog was originally posted in 2015. Although electrical definitions haven’t changed, it was updated in 2023 because the cost of electricity has increased and resources for monitoring power usage have been added.

We live in a world of electrical power. It runs our lights, heating, cooling, computers, and machinery. Consider a data center or any other large, power-hungry facility: it needs power to function, and it must ensure enough power is always available. But power isn’t free, and with near-constant price increases, understanding electrical usage matters more than ever. Data center managers track power closely because the cost of the energy a server uses over its useful life routinely exceeds its purchase price. On top of that, many data centers spend as much as twice that amount again to cool the servers and remove heat from the facility.

Resource: Guide to Data Center Power Monitoring

Here's a quick review of the basics of electricity: Volts, Amps, Watts, and Watt-hours. The cost examples at the end turn this review of the basics into a guide for figuring out whether investing in power monitoring makes sense for a business.

Electricity is the common name for electrical energy. Technically, electricity is the flow of electrons through a conductor, usually a copper wire. Whenever current flows to a device, the same amount has to flow back: it is a "closed loop" system. The electrons in a wire actually move quite slowly, nowhere near the speed of light. It is the signal, the wave of electrical energy, that travels at a large fraction of the speed of light.

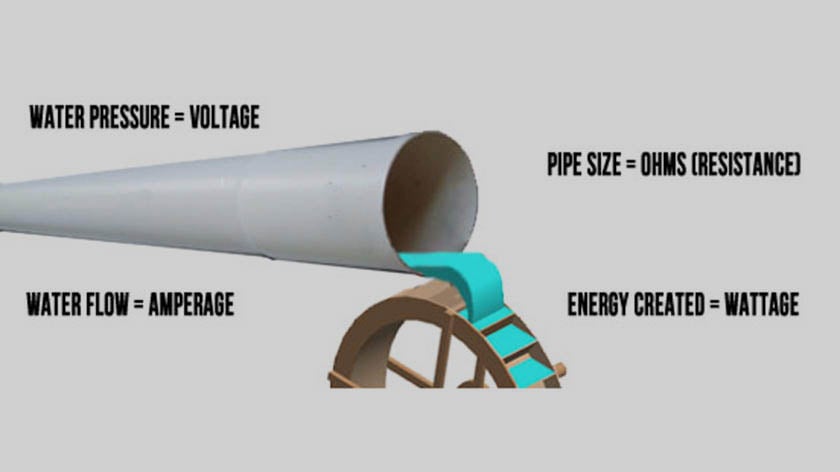

Water pipe analogy for understanding electricity

Imagine a 100-foot pipe filled with water: when you open a valve at one end, water flows out of the other end almost immediately, even though no single drop of water has traveled the full 100 feet. The pressure wave, however, has traveled 100 feet.
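To put a number on how slowly the electrons themselves move, the sketch below applies the standard drift-velocity relation v = I / (n·A·q) to copper. The inputs are illustrative assumptions, not figures from this post: a 10A current in a 2.5 mm² conductor, and an approximate free-electron density for copper of 8.5 × 10²⁸ per cubic metre.

```python
# Sketch: electron drift velocity in copper, v = I / (n * A * q).
# The wire size and current used below are illustrative assumptions.

ELECTRON_CHARGE_C = 1.602176634e-19    # elementary charge, in coulombs
COPPER_FREE_ELECTRONS_PER_M3 = 8.5e28  # approximate value for copper


def drift_velocity_m_per_s(current_a: float, cross_section_mm2: float) -> float:
    """Average speed (m/s) at which electrons drift along a copper wire."""
    area_m2 = cross_section_mm2 * 1e-6  # 1 mm^2 = 1e-6 m^2
    return current_a / (COPPER_FREE_ELECTRONS_PER_M3 * area_m2 * ELECTRON_CHARGE_C)


if __name__ == "__main__":
    v = drift_velocity_m_per_s(current_a=10.0, cross_section_mm2=2.5)
    hours_for_100_ft = 30.48 / v / 3600  # 100 feet = 30.48 metres
    print(f"Drift speed: {v * 1000:.2f} mm/s")
    print(f"Time for one electron to drift 100 feet: {hours_for_100_ft:.1f} hours")
```

With these assumptions it reports a drift speed of roughly 0.29 mm/s, so an individual electron would need more than a day to cover the 100 feet in the pipe analogy, even though the signal arrives almost instantly.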

Voltage is measured in Volts (V), named after Alessandro Volta. It is the "pressure" of electricity. Data centers typically receive power from the utility at a higher voltage, usually 480V in North America, which must then be transformed down to a lower voltage for use by the IT equipment. In North America, most IT systems found in a data center use 110V, 208V, or 220V; in much of the rest of the world, 220V to 240V is more common. Voltages within about 10% of each other are used interchangeably, so you may hear the same installation described as 110V, 115V, or 120V.
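The "within about 10%" rule of thumb can be written as a one-line check. This is a hypothetical helper for illustration only, and measuring the difference against the larger of the two values is our assumption, not an electrical standard.

```python
def same_nominal_voltage(a_volts: float, b_volts: float, tolerance: float = 0.10) -> bool:
    """Hypothetical helper: True if two voltage labels differ by at most
    `tolerance` (10% by default), measured against the larger value."""
    return abs(a_volts - b_volts) <= tolerance * max(a_volts, b_volts)


if __name__ == "__main__":
    for a, b in [(110, 120), (220, 240), (120, 208)]:
        print(f"{a}V vs {b}V interchangeable: {same_nominal_voltage(a, b)}")
```

Under this check, 110V and 120V (or 220V and 240V) count as the same class, while 120V and 208V do not.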

Electrical voltage, just like water pressure, does not really tell you how much "work" (power) a system can deliver. Imagine a tiny tube: it could deliver water at tremendous pressure, but you couldn’t use it to power a water wheel.

Current is measured in Amperes, or Amps (A), named after André-Marie Ampère. It is the "flow rate" of electricity: how much electric charge (how many electrons) flows through a given conductor per second. Current describes flow but not pressure, so on its own it doesn’t tell the full story of power.

Imagine a big water pipe: a lot of water could be flowing through it, but the energy it carries also depends on its pressure. Higher currents require thicker, more expensive cables. The main power feed to a large industrial facility can carry thousands of Amps. In a data center, this is split across many smaller circuits, so by the time power reaches a rack of servers, each feed typically carries 20A to 63A.

Power is measured in Watts (W), named after James Watt. It is the rate at which electricity does useful work. Watts describe the work being done at a given moment, NOT the energy consumed over time. Power in Watts is calculated by multiplying voltage in Volts by current in Amps: 10 Amps of current at 240 Volts delivers 2,400 Watts of power. This means that the same current can deliver twice as much power if the voltage is doubled. There is a growing demand for higher-voltage transmission lines, in part because they make renewable energy sources like solar and wind more viable. Data centers are also moving towards higher-voltage configurations. Power can also be measured as "real" power (Watts) or "apparent" power (volt-amperes), with a "power factor" between 0 and 1 that converts one to the other; strictly, for AC equipment, Volts × Amps gives apparent power.
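The relationship P = V × I is easy to check in code. The sketch below is a minimal illustration; the optional `power_factor` argument is our addition so the same function covers AC loads, and it defaults to 1.0, the case where Volts × Amps equals Watts. The second function rearranges the formula to show why delivering the same power at a lower voltage takes more current, and therefore thicker cable.

```python
def power_watts(volts: float, amps: float, power_factor: float = 1.0) -> float:
    """Real power in Watts: Volts x Amps x power factor (1.0 = resistive load)."""
    return volts * amps * power_factor


def current_amps(watts: float, volts: float, power_factor: float = 1.0) -> float:
    """Current needed to deliver `watts` of real power at `volts`."""
    return watts / (volts * power_factor)


if __name__ == "__main__":
    print(power_watts(240, 10))     # 2400.0 W, the example in the text
    print(power_watts(480, 10))     # 4800.0 W: same current, double the voltage
    print(current_amps(2400, 120))  # 20.0 A: same power, half the voltage
```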

Energy is measured in Watt-hours (Wh). One Watt-hour is the amount of work done (i.e., energy delivered) by a power of 1 Watt applied for 1 hour. A 100 Watt light bulb left on for 10 hours will consume 1,000 Wh (or 1 kWh) of energy.

You generally pay for electricity by the kilowatt-hour (kWh), or 1,000 Wh. The average cost in the U.S. is about $0.17 per kWh (a price that increased by about 15% between 2021 and 2023), and it is much higher in many other parts of the world. You can do the math on what your facility is spending. Here are a few examples.

First, a computer server that draws 500W and runs for a year will consume 500W × 8,760 hours = 4,380,000 Wh = 4,380 kWh. At $0.17 per kWh, the cost to run the server is 4,380 kWh × $0.17/kWh ≈ $745 per year. This doesn’t include the cost of cooling the server, which may double or even triple your overall annual cost.

Second, consider a cannabis grow facility. An electricity trade organization in Washington State estimated that it takes 2,000 to 3,000 kWh to power the lights needed to produce one pound of product. At $0.17 per kWh, that works out to $340 to $510 in lighting electricity per pound.

Finally, let’s look at electric vehicles. EV efficiency is commonly expressed in kilowatt-hours per mile, and a typical figure is around 0.35 kWh per mile. At $0.17 per kWh, you could theoretically travel a mile for about $0.06 in an EV. This sounds cheap, but if you operate a fleet of vehicles, it is certainly a cost you’ll want to keep a close eye on.
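The three examples above follow the same two steps: Watts × hours gives energy, and energy × price gives cost. Here is a minimal sketch that reproduces them at the $0.17/kWh average price. The fleet size and annual mileage at the end are hypothetical inputs, not data from this post, so substitute your own.

```python
PRICE_PER_KWH = 0.17   # average U.S. price quoted above, in USD
HOURS_PER_YEAR = 8_760


def energy_kwh(watts: float, hours: float) -> float:
    """Energy in kWh consumed by a constant load of `watts` over `hours`."""
    return watts * hours / 1000


def cost_usd(kwh: float, price_per_kwh: float = PRICE_PER_KWH) -> float:
    """Cost of `kwh` of energy at a flat price per kWh."""
    return kwh * price_per_kwh


if __name__ == "__main__":
    # 1. A 500 W server running all year; cooling may double or triple the total
    server_kwh = energy_kwh(500, HOURS_PER_YEAR)
    server_cost = cost_usd(server_kwh)
    print(f"Server: {server_kwh:,.0f} kWh -> ${server_cost:,.0f}/year "
          f"(${2 * server_cost:,.0f} to ${3 * server_cost:,.0f} with cooling)")

    # 2. Grow lights: 2,000 to 3,000 kWh per pound of product
    print(f"Grow lights: ${cost_usd(2_000):,.0f} to ${cost_usd(3_000):,.0f} per pound")

    # 3. An EV at 0.35 kWh per mile
    print(f"EV: ${cost_usd(0.35):.2f} per mile")

    # Hypothetical fleet: 20 vehicles x 15,000 miles per year each
    fleet_kwh = 20 * 15_000 * 0.35
    print(f"Fleet: {fleet_kwh:,.0f} kWh -> ${cost_usd(fleet_kwh):,.0f}/year")
```

The server line reports 4,380 kWh and about $745 per year, matching the hand calculation; changing `PRICE_PER_KWH` to your local tariff updates every example at once.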

There's no quiz at the end to test your knowledge. But paying attention to the basics can help avoid unpleasant surprises. Packet Power makes it easier and more affordable for critical facilities managers to track and analyze energy usage. Speak with our team to learn how we can help you with your needs.

Source: Average energy prices in the United States https://www.bls.gov/cpi/notices/2024/publication-changes.htm